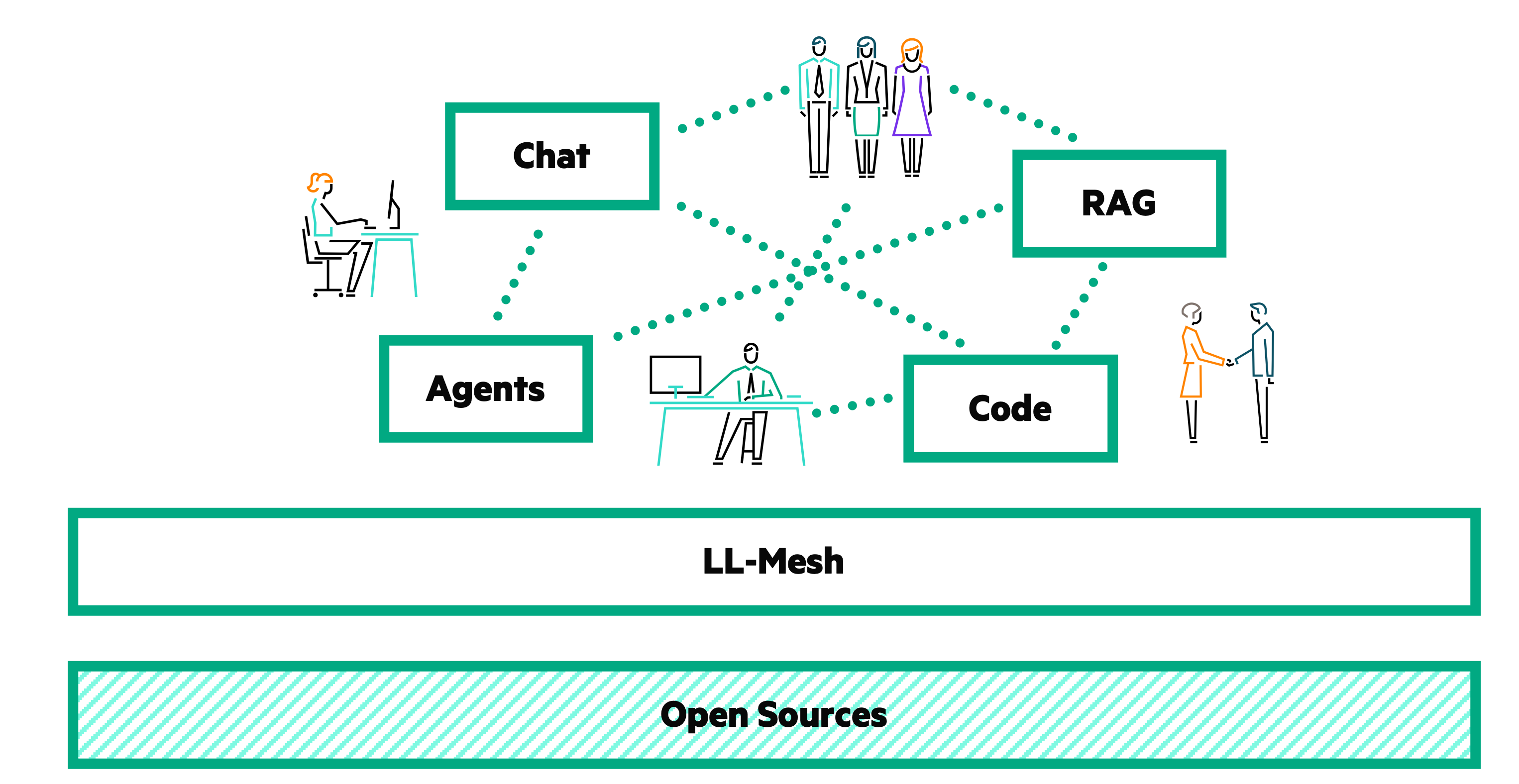

In my previous blog posts, I delved into the Chat Service, Agent Service, and RAG Service of the LLM Agentic Tool Mesh open source project. Today, I'll explore the system services of LLM Agentic Tool Mesh, which are essential for managing and orchestrating the mesh of agentic tools.

I'll provide insights into these services, showcase an example of a Mesh available in the repository, discuss federated governance, and share our vision for the future evolution of the LLM Agentic Tool Mesh project.

Understanding the system services

The system services in LLM Agentic Tool Mesh are crucial for the seamless operation and orchestration of agentic tools and web applications. These services ensure consistency, ease of use, and flexibility across the platform. They include:

- Tool Client Service

- Tool Server Service

Let's explore each of these components in detail.

Tool Client Service

The Tool Client Service enables developers to transform any code function into an LLM Agentic Tool Mesh tool by applying a simple decorator. This service abstracts the complexities of tool integration, allowing for quick conversion of functions into reusable tools within the LLM Agentic Tool Mesh ecosystem.

Key features:

- Decorator-based: Convert functions into tools using the `@AthonTool` decorator

- Seamless integration: Decorated functions are fully integrated into the LLM Agentic Tool Mesh platform

Example usage:

    from athon.system import AthonTool, Logger

    config = {
        "greetings": "Hello World! Welcome, "
    }
    logger = Logger().get_logger()


    @AthonTool(config, logger)
    def hello_world(query: str) -> str:
        """Greets the user."""
        greeting_message = f"{config['greetings']} {query}!"
        return greeting_message.capitalize()
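To make the decorator idea more concrete, here is a minimal sketch of how a decorator can turn a plain function into a described, discoverable tool. This is not how `AthonTool` is implemented: `simple_tool`, `TOOL_REGISTRY`, and the manifest fields are hypothetical names chosen purely for illustration.

```python
import functools
import inspect
from typing import Any, Callable, Dict

# Hypothetical in-memory registry; the real platform manages tools differently.
TOOL_REGISTRY: Dict[str, Dict[str, Any]] = {}


def simple_tool(config: Dict[str, Any]) -> Callable:
    """Hypothetical decorator factory that registers a function as a tool."""

    def decorator(func: Callable) -> Callable:
        @functools.wraps(func)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            return func(*args, **kwargs)

        # Build a small manifest from the function's name, docstring, and signature.
        signature = inspect.signature(func)
        TOOL_REGISTRY[func.__name__] = {
            "name": func.__name__,
            "description": inspect.getdoc(func) or "",
            "arguments": list(signature.parameters),
            "config": config,
            "function": wrapper,
        }
        return wrapper

    return decorator


config = {"greetings": "Hello World! Welcome,"}


@simple_tool(config)
def hello_world(query: str) -> str:
    """Greets the user."""
    return f"{config['greetings']} {query}!"


print(hello_world("Alice"))
print(TOOL_REGISTRY["hello_world"]["description"])
```

The point of the pattern is that the function body stays untouched: everything a tool consumer needs (name, description, argument names) is derived from metadata the function already carries.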

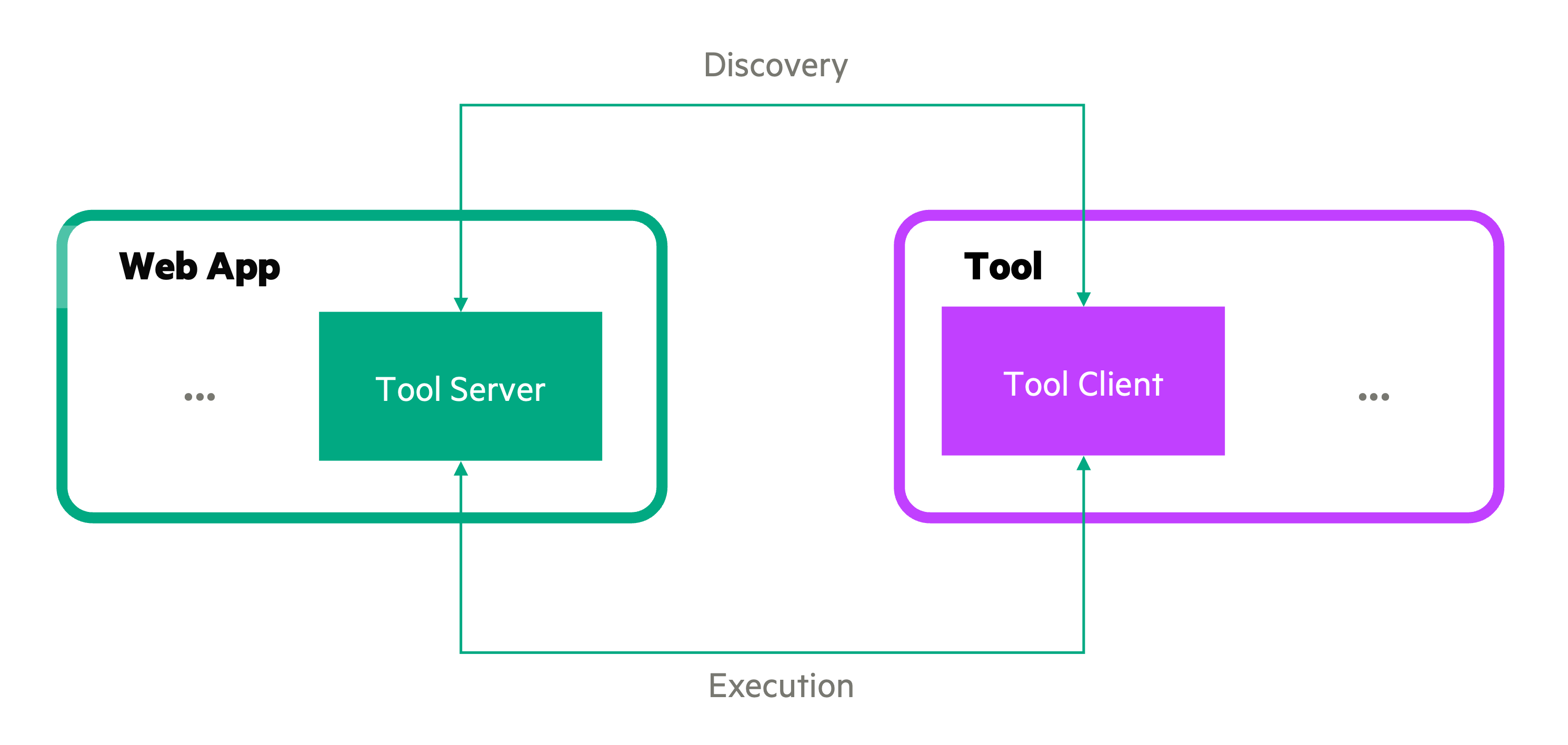

Tool Server Service

The Tool Server Service provides the necessary infrastructure to manage and run LLM Agentic Tool Mesh tools on the platform. It includes capabilities for tool discovery and execution, ensuring that tools are easily accessible and efficiently managed.

Key features:

- Tool discovery: Automatically discover tools within the platform

- Execution management: Manage the execution of tools, ensuring efficient operation

Example usage:

    from athon.agents import ToolRepository
    from athon.system import ToolDiscovery

    projects_config = [
        {
            "name": "Simple Project",
            "tools": [
                "examples/local_tool",      # A local tool path
                "https://127.0.0.1:5003/",  # A remote tool URL
            ]
        }
    ]
    tools_config = {
        "type": "LangChainStructured"
    }


    def discover_and_load_tools(projects_config, tools_config):
        tool_repository = ToolRepository.create(tools_config)
        tool_discovery = ToolDiscovery()
        tool_id_counter = 1
        for project in projects_config:
            for tool_reference in project["tools"]:
                tool_info = tool_discovery.discover_tool(tool_reference)
                if tool_info:
                    tool_metadata = {
                        "id": tool_id_counter,
                        "project": project["name"],
                        "name": tool_info["name"],
                        "interface": tool_info.get("interface")
                    }
                    tool_repository.add_tool(tool_info["tool"], tool_metadata)
                    tool_id_counter += 1
        return tool_repository


    # Run the tool discovery and loading process
    tool_repository = discover_and_load_tools(projects_config, tools_config)

    # Display the discovered tools
    for tool in tool_repository.get_tools().tools:
        print(f"Discovered tool: {tool['name']} from project: {tool['metadata']['project']}")
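The project configuration above mixes a local path and a remote URL in the same `tools` list. How `ToolDiscovery` tells the two apart is not shown in this post; one plausible approach, sketched here as an assumption, is to parse each reference and treat anything with an `http` or `https` scheme as remote. The helpers `classify_tool_reference` and `describe_tool_reference` are hypothetical and are not part of the `athon` package.

```python
from pathlib import Path
from urllib.parse import urlparse


def classify_tool_reference(reference: str) -> str:
    """Return "remote" for http(s) URLs and "local" for anything else."""
    parsed = urlparse(reference)
    if parsed.scheme in ("http", "https") and parsed.netloc:
        return "remote"
    return "local"


def describe_tool_reference(reference: str) -> dict:
    """Summarize a tool reference as a small dictionary (hypothetical helper)."""
    kind = classify_tool_reference(reference)
    if kind == "remote":
        parsed = urlparse(reference)
        return {"kind": kind, "host": parsed.hostname, "port": parsed.port}
    return {"kind": kind, "path": Path(reference).as_posix()}


# The two references used in the example configuration.
for ref in ["examples/local_tool", "https://127.0.0.1:5003/"]:
    print(describe_tool_reference(ref))
```

Keeping the classification in one small function means the rest of a discovery loop can stay agnostic about where a tool lives.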

Building a mesh of LLM Agentic Tools

We have developed a series of web applications and tools, complete with examples, to demonstrate the capabilities of LLM Agentic Tool Mesh in our GitHub repo.

Web applications:

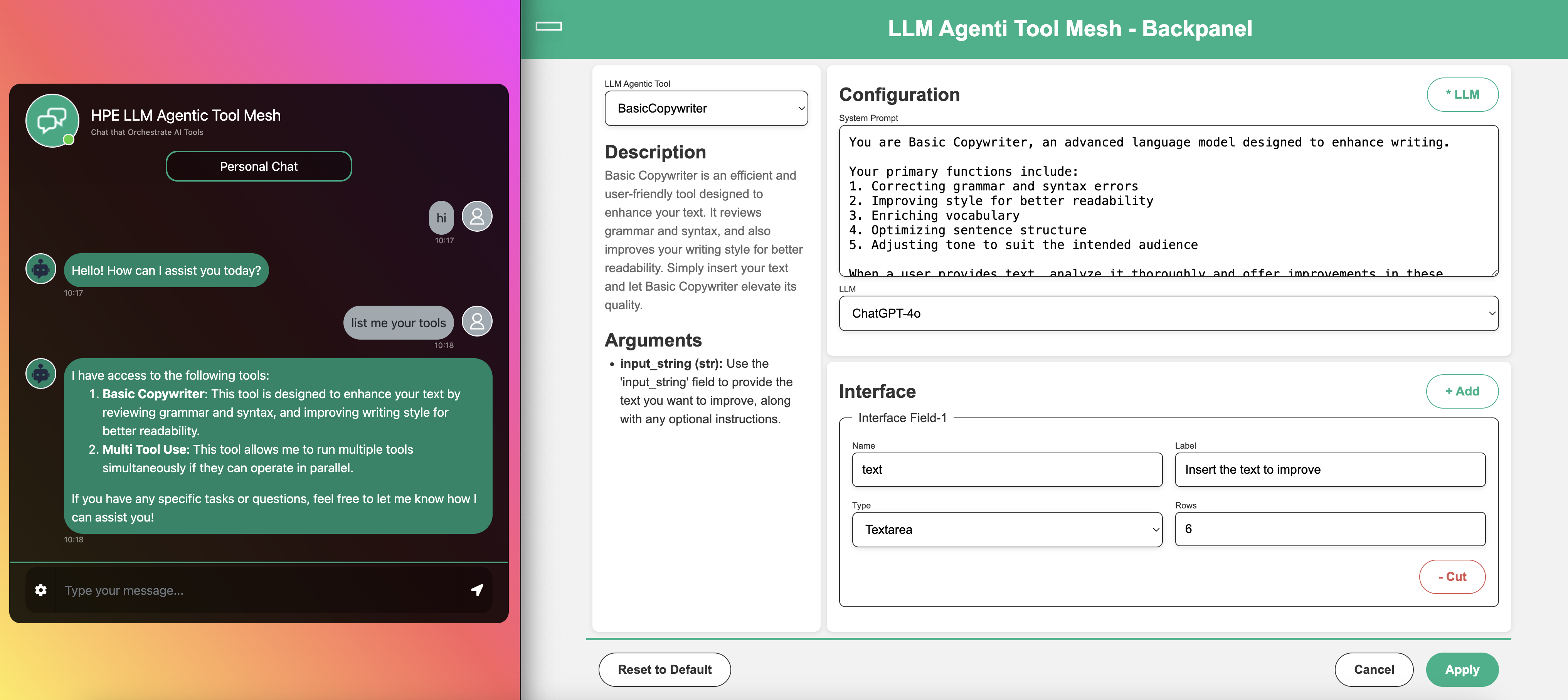

- Chatbot (`examples/app_chatbot`): This is a chatbot capable of reasoning and invoking appropriate LLM tools to perform specific actions. You can configure the chatbot using files that define LLM Agentic Tool Mesh platform services, project settings, toolkits, and memory configurations. The web app orchestrates both local and remote LLM tools, allowing them to define their own HTML interfaces, supporting text, images, and code presentations.

- Admin panel (`examples/app_backpanel`): There's an admin panel that enables the configuration of basic LLM tools to perform actions via LLM calls. It allows you to set the system prompt, select the LLM model, and define the LLM tool interface, simplifying the process of configuring LLM tool interfaces.

Tools:

- Basic copywriter (`examples/tool_copywriter`): This tool rewrites text, providing explanations for enhancements and changes.

- Temperature finder (`examples/tool_api`): This tool fetches and displays the current temperature for a specified location by utilizing a public API.

- Temperature analyzer (`examples/tool_analyzer`): This tool uses a language model to generate code that analyzes historical temperature data and creates visual charts for better understanding.

- Telco expert (`examples/tool_rag`): The RAG tool provides quick and accurate access to 5G specifications.

- OpenAPI manager (`examples/tool_agents`): This multi-agent tool reads OpenAPI documentation and provides users with relevant information based on their queries.

Running the examples

You can run the tools and web applications individually or use the provided run_examples.sh script to run them all together. Once everything is started:

- Access the chatbot at https://127.0.0.1:5001/

- Access the admin panel at https://127.0.0.1:5011/
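If you start everything with the script, it can be handy to confirm that both web apps are actually listening before opening a browser. The following is a minimal sketch that only checks whether a TCP connection to each port succeeds; it does not validate TLS or the HTTP response. The `EXAMPLE_APPS` mapping and the `is_listening` helper are hypothetical names, and the ports are the ones quoted above, so adjust them if your setup differs.

```python
import socket
from urllib.parse import urlparse

# URLs taken from this post; adjust if your setup uses different ports.
EXAMPLE_APPS = {
    "chatbot": "https://127.0.0.1:5001/",
    "admin panel": "https://127.0.0.1:5011/",
}


def is_listening(url: str, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to the URL's host and port succeeds."""
    parsed = urlparse(url)
    # Fall back to the scheme's default port when the URL does not name one.
    port = parsed.port or (443 if parsed.scheme == "https" else 80)
    try:
        with socket.create_connection((parsed.hostname, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    for name, url in EXAMPLE_APPS.items():
        status = "up" if is_listening(url) else "not reachable"
        print(f"{name}: {url} is {status}")
```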

Federated governance and standards

In the LLM Agentic Tool Mesh platform, the management of LLM tools is decentralized, promoting flexibility and innovation. To ensure this decentralization does not compromise the platform's integrity, LLM Agentic Tool Mesh implements a unified framework of governance policies and standards.

Key principles:

- Interoperable standards: Ensures all tools and services work together seamlessly while adhering to best practices

- Ethical compliance: Emphasizes minimizing biases, ensuring fairness, and upholding ethical principles across all AI tools and models

- Security and privacy: Maintains rigorous standards to protect data and ensure compliance with privacy regulations

- Continuous improvement: Encourages feedback and collaboration to refine governance practices

- Automated governance: Plans to extend code quality checks to enforce governance policies, ensuring comprehensive compliance across the platform

LLM Agentic Tool Mesh includes a dedicated repository (`federated_governance/`) containing text files that outline various policies and standards. These documents cover essential areas such as LLM model usage, RAG processes, and more.

Vision for LLM Agentic Tool Mesh project evolution

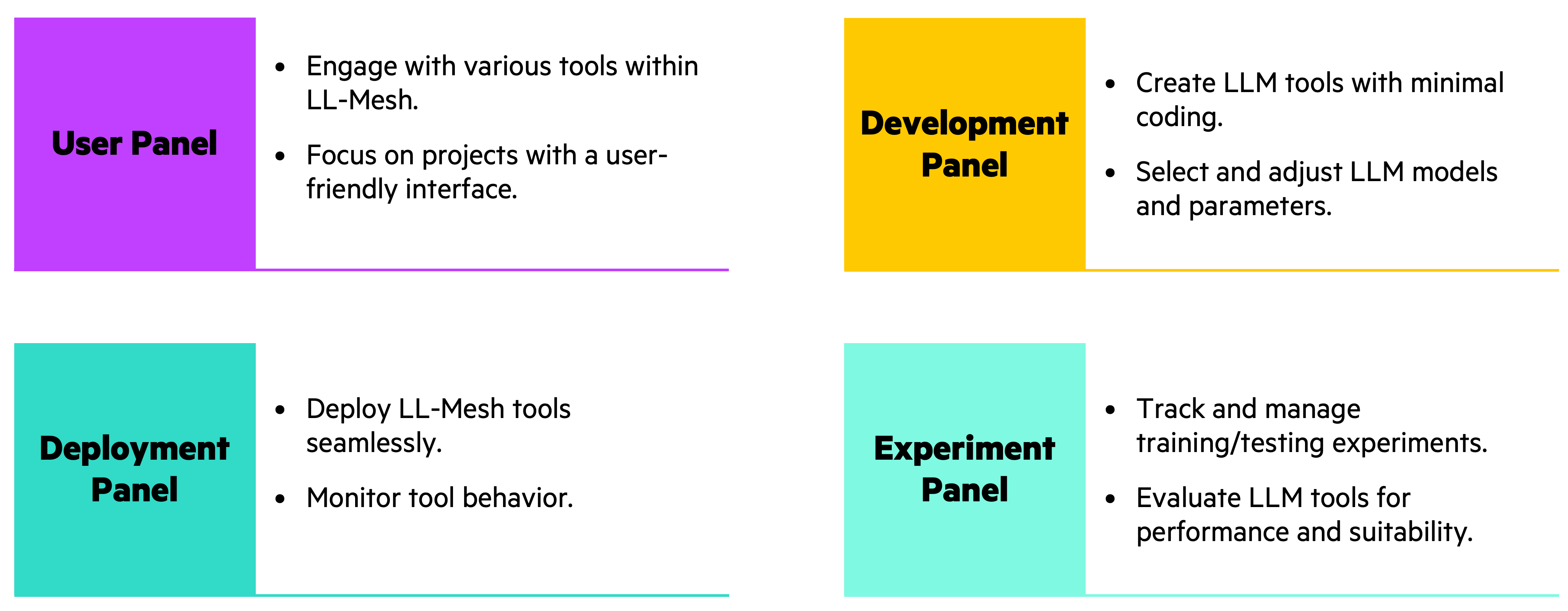

The LLM Agentic Tool Mesh platform is evolving into a comprehensive solution, providing several panel views tailored for various stages of the tool and application lifecycle.

When creating a new web app, you can build upon the existing examples. With all services fully parameterized, there is unparalleled flexibility to design diverse user experience panels. For instance, current examples include a chatbot as a user interface and an admin panel for configuring an LLM tool. Additionally, web apps can be developed to support deployment tasks or facilitate experiments aimed at optimizing service parameters for specific objectives.

Currently, the platform provides a user panel and a development panel.

User panel:

- Implemented features: This panel focuses on engaging with tools like Chat, RAG, and Agent services. It provides a user-friendly interface for interacting with these capabilities.

- Future goals: We plan to enrich the existing services, offering an even more seamless and feature-rich experience for end-users.

Development panel:

- Implemented features: This panel is partially implemented by the backpanel web app example, which allows users to modify the basic copywriter agentic tool and the RAG tool at runtime.

- Future goals: We aim to add more system services to support development, including real-time LLM tuning and configuration.

In the future, we hope to be able to offer a deployment panel and an experiment panel.

Deployment panel (future):

- Purpose: This panel will focus on deploying the LLM Agentic Tool Mesh tools seamlessly across one or more clusters, enabling large-scale and distributed deployments.

- Planned features: We hope to offer tools for monitoring deployed tools, orchestrating distributed systems, and managing deployment pipelines.

Experiment panel (future):

- Purpose: This panel will be designed to track and manage experiments to optimize LLM tool performance and suitability.

- Planned features: This panel will allow users to try different configurations and compare outcomes, helping teams evaluate the most effective settings for their use cases.

Our mission ahead

As LLM Agentic Tool Mesh evolves, we aim to continue enhancing the platform by enriching existing services, especially in the user panel, and expanding the development panel with more robust system services. The addition of the deployment panel and experiment panel will complete the platform's vision, enabling a fully integrated lifecycle from development to deployment and optimization.

Stay tuned as we advance toward democratizing Gen AI with a comprehensive, flexible, and user-centric platform!
