In a recent series of blog posts, I've explored various facets of generative AI and its profound impact on telecommunications and corporate tools. HPE Athonet's revolutionary approaches in the digital landscape were highlighted in these discussions:

- "The transformative impact of generative AI on Telco products" discussed the pivotal role of AI in enhancing product offerings.

- "HPE Athonet LLM Platform: From personal assistant to collaborative corporate tool" highlighted the evolution of the platform into a powerful collaborative suite.

- “HPE Athonet LLM Platform: Driving users towards peak 'Flow' efficiency focused on optimizing user efficiency and engagement.

These insights set the stage for a profound transformation in AI centered around:

- Transitioning to a team-focused collaborative framework.

- Enhancing user experience to boost productivity and focus.

- Building a resilient infrastructure based on data mesh principles, ensuring robust security and adherence to ethical standards.

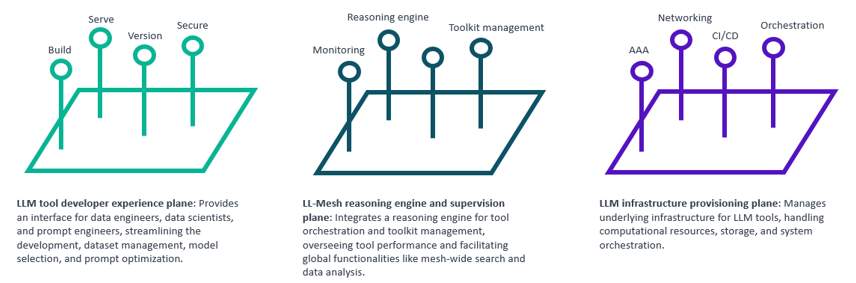

Today, the discussion delves deeper into the third pillar inspired by the Data Mesh concepts detailed in the article “Data Mesh Principles and Logical Architecture” Here I introduce the "LL-Mesh" to create a clean, scalable architecture that can swiftly adapt to the rapid advancements in technology . This tailored approach adapts the Data Mesh framework to the unique needs of LLMs, merging data, context, and reasoning capabilities into a cohesive whole.

The LL-Mesh embodies the data itself and includes reasoning capabilities, orchestrating tools enhanced with LLM technology. Developers using this platform take charge of everything from the underlying data to the APIs and documentation, ensuring a seamless experience for end-users. This initiative is more than a technical upgrade—it’s a significant leap towards digital transformation that promises substantial competitive advantage through enhanced knowledge sharing within the company that adopts it, leading to the customization of products and services tailored specifically for customers.

However, there are some challenges, including the need to master new generative AI technologies, the importance of managing data properly by adopting the 'data as code' concept, and the cultural shift required to maintain agility and continuously improve all company processes.

Expecting every team tasked with developing a tool to start from scratch is impractical. Instead, it's crucial to offer a supportive platform that delivers the essential services needed for creation, complemented by a federated governance structure that lays out clear rules and guidelines. In the sections that follow, these principles are explored more in detail, alongside additional insights to guide the development process.

Domain-specific LLM tool ownership and decentralization

In the LL-Mesh framework, ownership of LLM tools is strategically distributed across various teams or departments, with each group taking full responsibility for their respective tool's development, maintenance, and quality assurance. This organizational structure aligns with modern enterprise setups, which are typically divided into specific business domains. By applying LL-Mesh principles along these natural divisions, tools are finely tuned to meet the unique requirements of each domain, such as customer service or research and development.

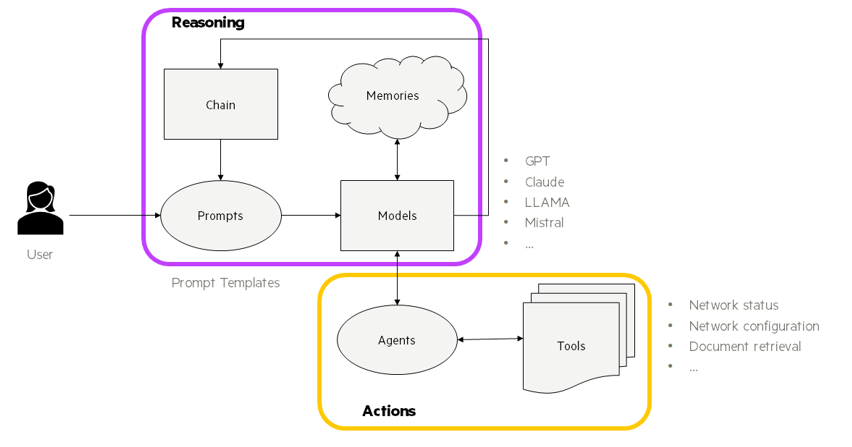

Central to LL-Mesh are data and prompts, metadata, and models, all supported by the necessary computational power to process and deliver results efficiently. This architecture not only facilitates streamlined data management but also significantly enhances the model’s capability to learn and adapt. By aligning the decomposition of tools with the business domains, LL-Mesh ensures that each tool operates within well-defined operational parameters, optimizing both performance and adaptability.

LLM tool as product

Each LLM tool is crafted as a specialized product, meticulously designed with the end-user in mind to ensure user-centricity, efficiency, and effectiveness. This philosophy treats each tool as a comprehensive solution that spans from data generation to consumption, emphasizing a seamless and integrated experience. It is crucial that a dedicated team, including a product owner, is responsible for managing the tool end-to-end, ensuring that all stages—from using documents for information analysis with LLMs to presenting results—are harmoniously interconnected. This holistic approach not only enhances the functionality of each phase but also significantly boosts the overall quality of the tool.

The principle of viewing an LLM tool as a product also tackles the persistent issue of data silos. Like data products, there should be a system in place that enables the use of multiple LLM tools in concert. It is essential for these tools to provide clear interfaces and capabilities, including discoverability, security, understandability, and trustworthiness. This ensures that tools not only function independently but also work cohesively within a larger ecosystem, enhancing interoperability and user trust.

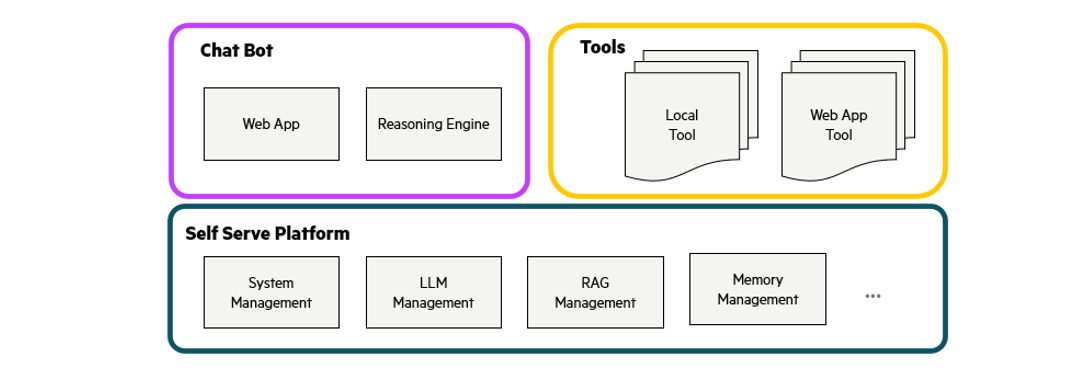

LLM platform infrastructure

A self-service infrastructure platform is provided with the tool, enabling teams to autonomously develop, deploy, and manage their LLM tools. This platform supports the necessary frameworks to promote autonomy and innovation. Envisioned as LL-Mesh, it extends capabilities beyond enhancing user experience for chatbot users to include interfaces for various stakeholders, including document owners, tool developers, and deployment teams. This requires the development of diverse user interfaces tailored to different interactions within the ecosystem.

At MWC 2024 (Mobile World Congress), a proof of concept demonstrated the robustness of this architecture by showcasing a chatbot capable of supporting multiple tools through the self-service capabilities of the LLM platform. This facilitates the development and integration of both individual tools and comprehensive chatbot solutions.

The architecture leverages a reasoning engine where the LLM serves as a central hub, orchestrating various tools to ensure streamlined communication and functionality. Integrating a tool into this engine is straightforward: simply define the tool and its arguments in a prompt, and the LLM will intelligently determine when to engage the tool based on user requests.

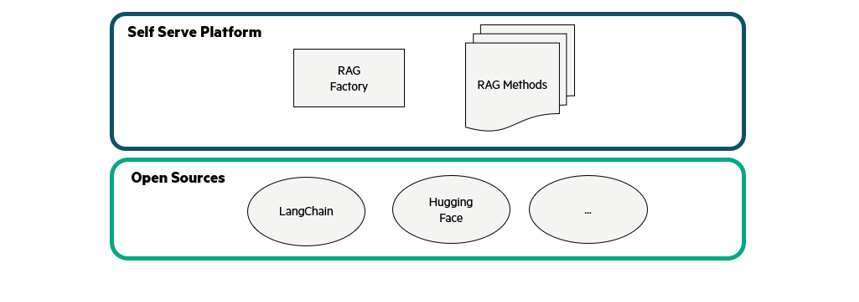

Designed to be both extendable and modular, each component of the platform employs design patterns such as the factory method and strategy pattern. These patterns ensure a decoupling of creation from usage, providing a flexible and scalable architecture that is easy to maintain. Each module operates independently yet conforms to a unified global interface, enhancing system integrity and adaptability. The self-serve platform contains the following features:

- Utility functions simplify the complexity of underlying libraries, such as LangChain, Hugging Face, and LLaMA Index

- Domain-specific high-level functions, for example, parsing telecommunications standards using Retrieval-Augmented Generation (RAG).

Federated governance and standards

While the management of LLM tools is decentralized, a unified framework of governance policies and standards is crucial to ensure consistency, ethical integrity, and overall quality across the platform. Federated governance effectively balances the drive for innovation with rigorous standards for security, privacy, and compliance, which are vital to the operation of the LL-Mesh. This approach encompasses several key elements:

- Decentralized autonomy: Domain-specific teams are empowered to manage their LLM tools, fostering a sense of ownership and responsiveness to specific needs, all while aligning with overarching governance standards of the mesh.

- Interoperable standards: Common guidelines for security, data privacy, and model behavior are established to ensure all interactions are helpful and harmless, promoting seamless and safe integration across different tools and domains.

- Ethical compliance: It is critical that all LLMs operate within ethical guidelines and regulatory frameworks to foster trust and reliability. This commitment to ethical compliance supports honest interactions and adherence to broader social and legal standards.

- Central oversight: A centralized governance body is tasked with enforcing these policies across the mesh, ensuring that all components adhere to responsible AI principles and maintain a high standard of integrity and accountability.

- Automated governance tools: Advanced technologies are deployed to automate the enforcement of governance standards, ensuring consistent compliance with the principles of being helpful, harmless, and honest.

- Collaboration and feedback: Continuous collaboration and feedback mechanisms within the LL-Mesh are crucial for adapting and refining governance practices. This open dialogue encourages ongoing improvement and alignment with emerging technologies and challenges.

By embracing these principles, the LL-Mesh seeks to cultivate a robust, ethical, and dynamic ecosystem for the development and deployment of LLM tools. This approach ensures that all tools not only add significant value but also meet stringent standards of responsibility and governance. These efforts underscore HPE Athonet's commitment to driving innovation and digital transformation in network management by developing a responsible framework and product.

Related

HPE Athonet LLM Platform: Driving Users towards peak 'Flow' efficiency

Mar 20, 2024

HPE Athonet LLM Platform: From personal assistant to collaborative corporate tool

Mar 13, 2024